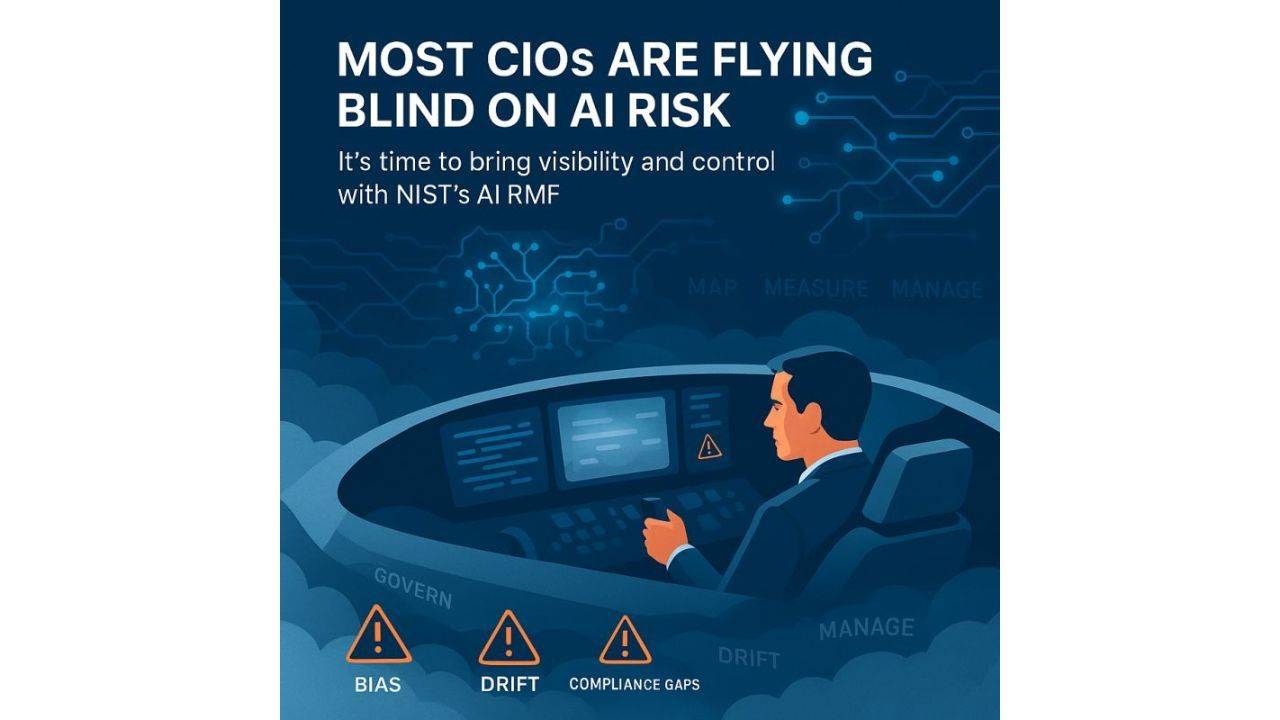

𝐌𝐨𝐬𝐭 𝐂𝐈𝐎𝐬 𝐚𝐫𝐞 𝐟𝐥𝐲𝐢𝐧𝐠 𝐛𝐥𝐢𝐧𝐝 𝐨𝐧 𝐀𝐈 𝐫𝐢𝐬𝐤

Here's the framework that changed how I think about governance. 🎯

Last month, I watched a major enterprise halt its AI deployment 48 hours before launch.

𝐖𝐡𝐲? They couldn't answer one simple question: "𝘞𝘩𝘢𝘵 𝘩𝘢𝘱𝘱𝘦𝘯𝘴 𝘪𝘧 𝘵𝘩𝘪𝘴 𝘨𝘰𝘦𝘴 𝘸𝘳𝘰𝘯𝘨?"

𝐓𝐡𝐞𝐲 𝐡𝐚𝐝 𝐭𝐡𝐞 𝐭𝐞𝐜𝐡𝐧𝐨𝐥𝐨𝐠𝐲. They had the budget. But they were missing the structure.

That's when I dove deep into NIST's AI Risk Management Framework and realized most organizations are skipping the fundamentals.

The framework breaks down into four core functions:

🏛️ Govern: Who makes decisions? Who's accountable when AI fails?

🗺️ Map: What risks are hiding in your AI systems? (Bias, privacy violations, downstream harms you haven't considered)

📊 Measure: You can't manage what you can't measure. Define your metrics before deployment, not after.

⚙️ Manage: Turn identified risks into action. Allocate resources, build fallbacks, and track incidents and gaps as they surface.

Here's what surprised me: these aren't linear steps. They're iterative. As your AI systems evolve, so must your governance. 🔄

The framework is voluntary, but it's fast becoming the de facto standard. Regulators are watching. Stakeholders are asking harder questions.

For CIOs: Which function is your weakest link right now: Govern, Map, Measure, or Manage? 💬

Take the FREE AI Governance Scorecard to see how you measure up.
